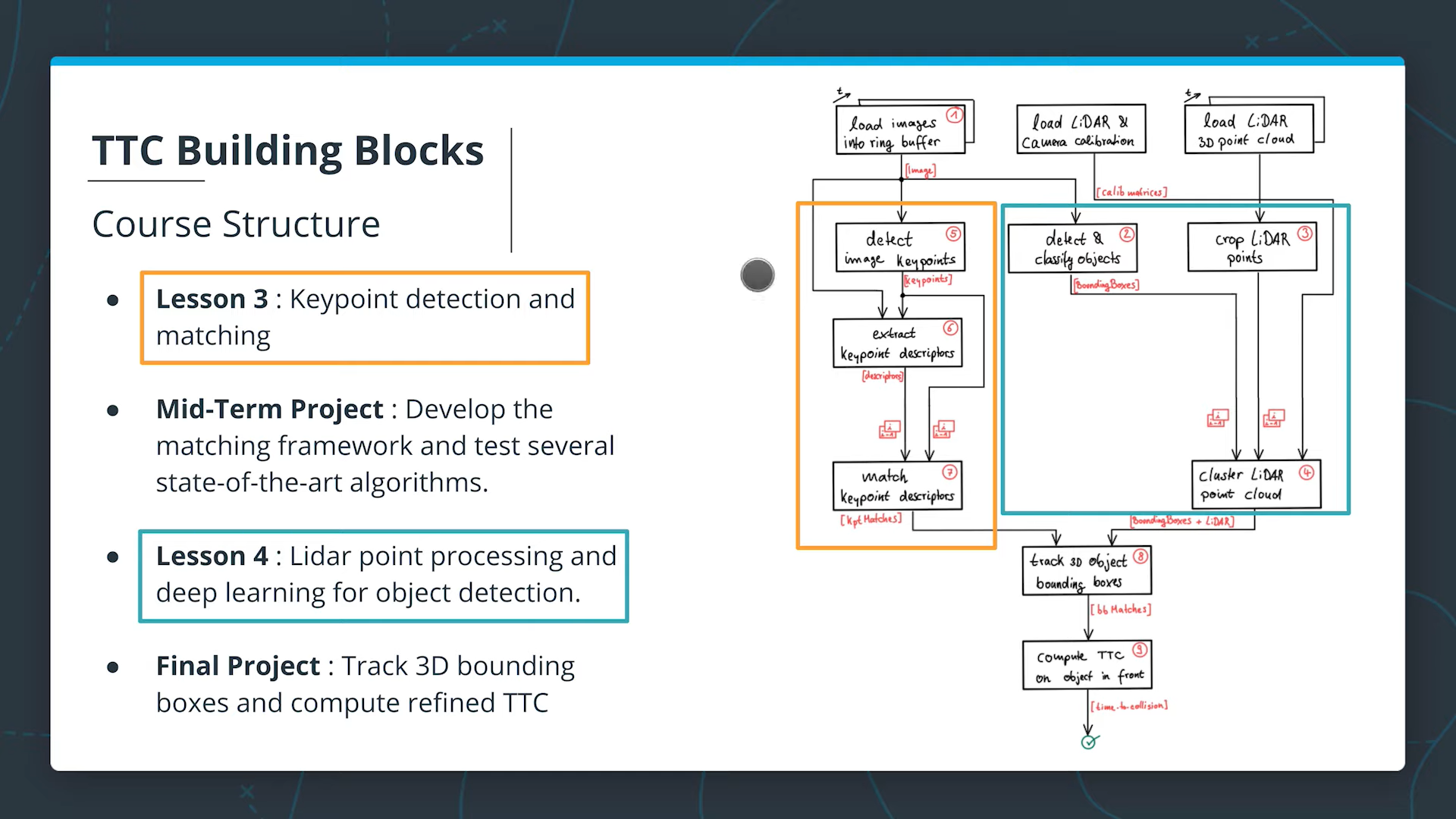

Course Structure

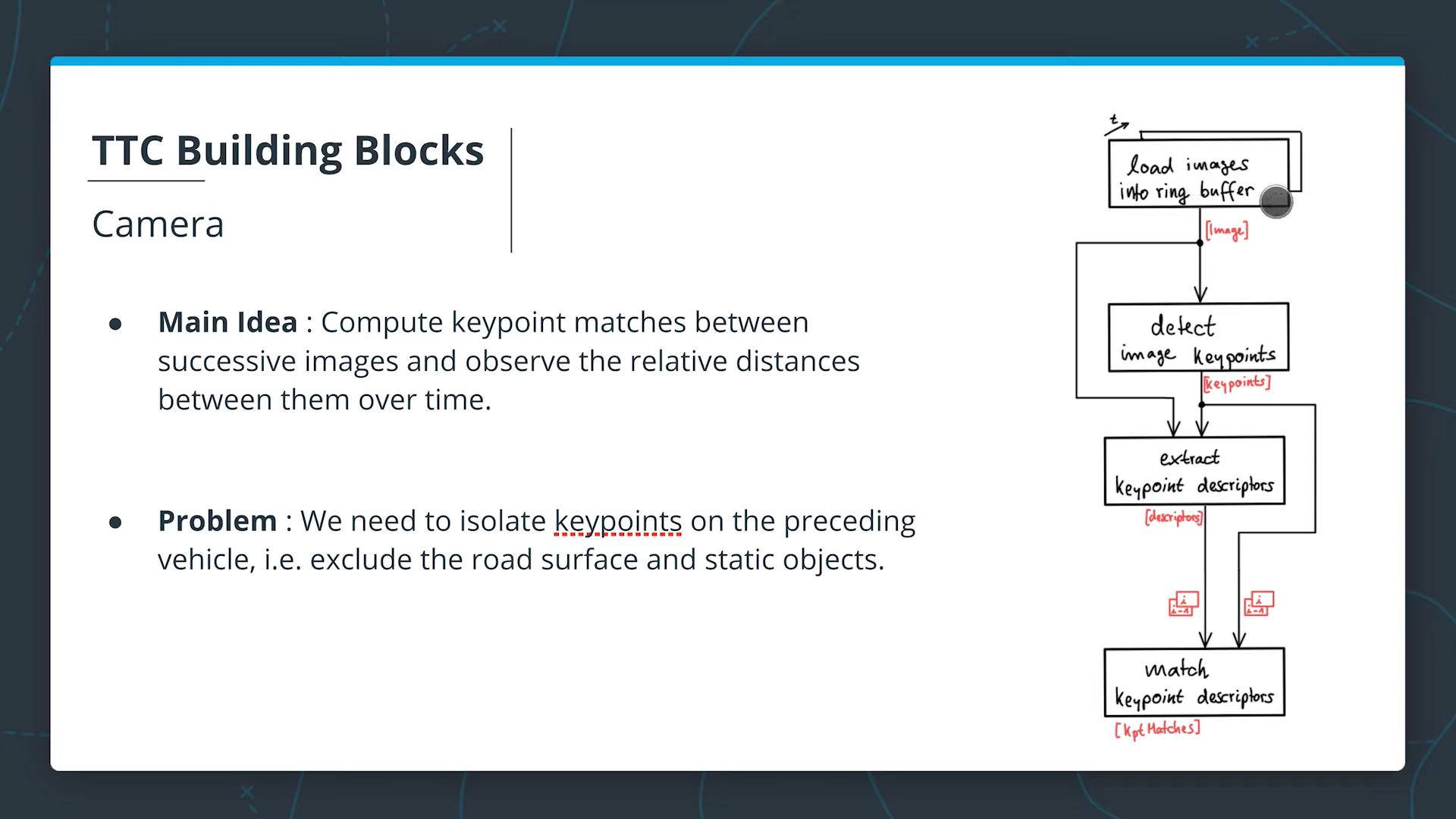

- The ring buffer gives us the images at each point in time.

- Image keypoint detection (the second block) can be done with various methods; all of them return a set of keypoints.

- Extract keypoint descriptors: around every keypoint location, a descriptor is computed that reflects the properties of the keypoint's local neighborhood. This descriptor is used to uniquely identify the keypoint in the image.

- The output of the Match Keypoint Descriptors block is a set of keypoint matches for two successive images: keypoints found in one image, each paired with its corresponding partner in the next image. These matches enable us to calculate the time to collision (TTC).
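The steps above can be sketched in a few lines. This is only an illustration, not the course implementation: it assumes binary descriptors stored as plain integers, a hypothetical `match_descriptors` helper that does brute-force nearest-neighbor matching by Hamming distance, and a made-up `max_dist` threshold. The ring buffer is a `collections.deque` with `maxlen=2`, so it always holds the two most recent frames.

```python
from collections import deque


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_descriptors(prev_desc, curr_desc, max_dist=2):
    """Brute-force nearest-neighbor matching (hypothetical helper).

    Returns (prev_index, curr_index) pairs whose Hamming distance
    is at most max_dist (an assumed threshold).
    """
    matches = []
    if not curr_desc:
        return matches
    for i, d_prev in enumerate(prev_desc):
        # Find the closest descriptor in the current image.
        j, dist = min(
            ((j, hamming(d_prev, d_curr)) for j, d_curr in enumerate(curr_desc)),
            key=lambda pair: pair[1],
        )
        if dist <= max_dist:
            matches.append((i, j))
    return matches


# Ring buffer that keeps only the two most recent frames.
ring_buffer = deque(maxlen=2)

# Toy frames; the descriptor values are invented for illustration.
frames = [
    {"id": 0, "descriptors": [0b10110010, 0b01001101, 0b11110000]},
    {"id": 1, "descriptors": [0b01001111, 0b10110011, 0b00001111]},
    {"id": 2, "descriptors": [0b00001110, 0b01001011]},
]

for frame in frames:
    ring_buffer.append(frame)  # the oldest frame drops out automatically
    if len(ring_buffer) == 2:
        prev, curr = ring_buffer
        frame["matches"] = match_descriptors(prev["descriptors"], curr["descriptors"])
```

A fixed-size buffer keeps memory bounded no matter how long the image sequence is, because only the frames needed for matching are held at any time.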

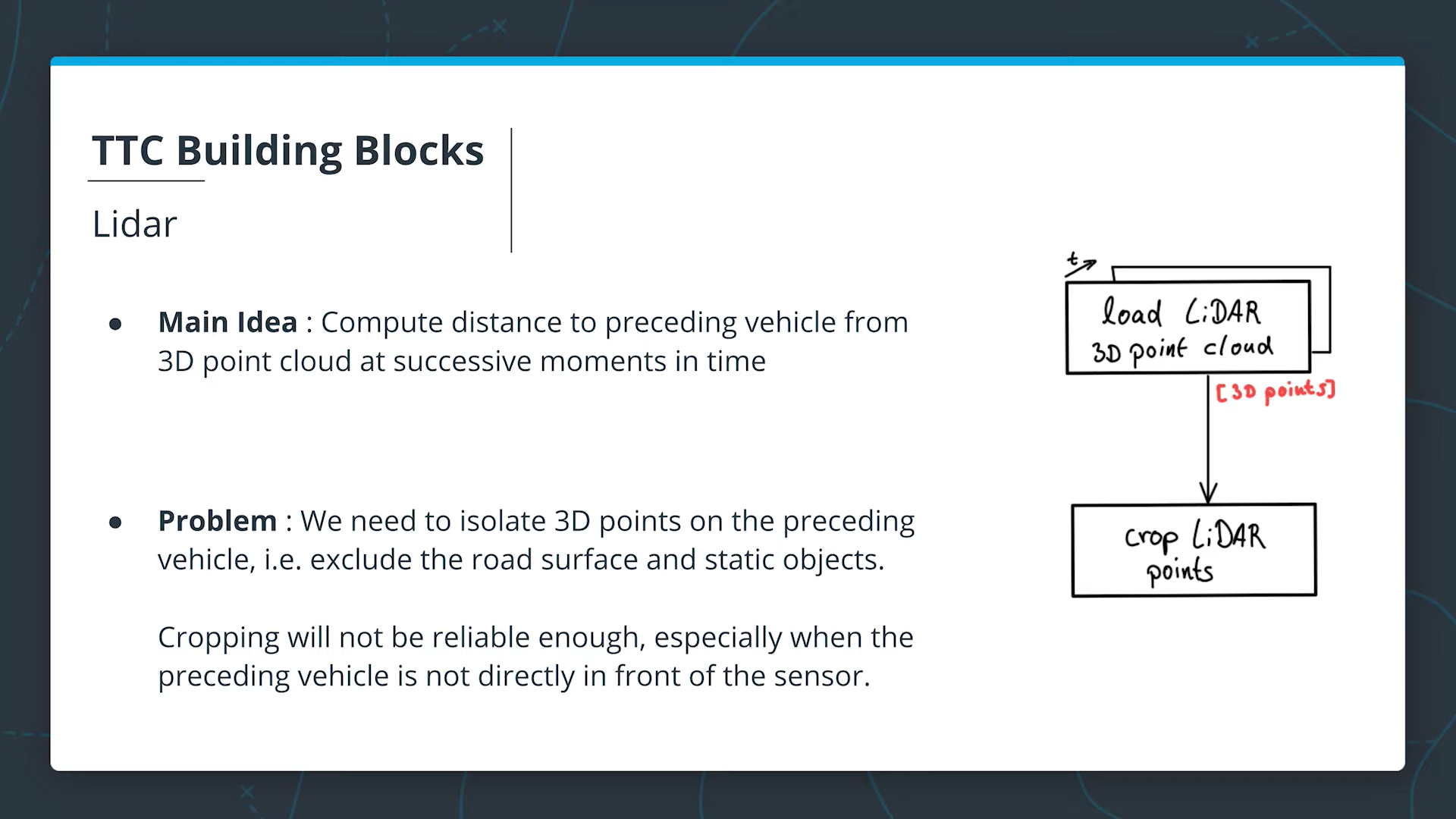

- The road surface can be excluded by removing all points below a certain height, chosen so that it lies safely above the road surface.
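A minimal sketch of this height filter, under assumptions that are not in the notes: points are `(x, y, z)` tuples in a sensor frame with `z` pointing up, the lidar sits roughly 1.5 m above the road (so road returns appear near `z = -1.5`), and the `min_height` threshold of -1.4 m is a made-up value.

```python
def remove_road_surface(points, min_height=-1.4):
    """Keep only points whose z coordinate lies above min_height (metres).

    min_height is an assumed threshold; it must sit safely above the road.
    """
    return [p for p in points if p[2] > min_height]


# Toy point cloud (x, y, z) in metres; values are invented for illustration.
points = [
    (5.0, 0.2, -1.55),   # road surface
    (5.1, -0.3, -1.48),  # road surface
    (7.9, 0.1, -0.60),   # rear bumper of the preceding vehicle
    (8.0, 0.0, 0.10),    # vehicle body
]

filtered = remove_road_surface(points)
```

A fixed threshold only works on a roughly flat road; on slopes, a fitted ground plane would likely be needed instead.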

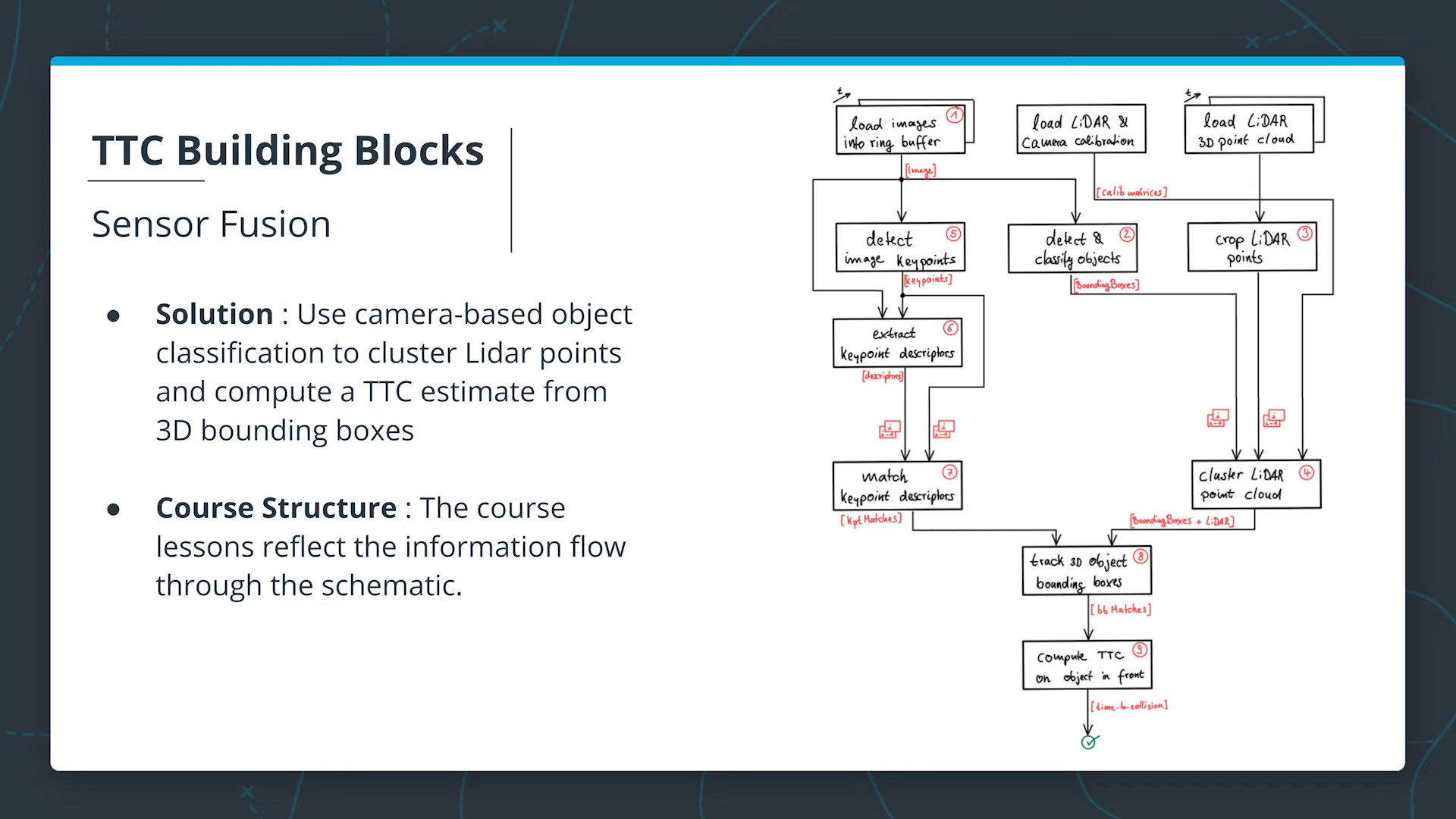

Complete Diagram

- The output of block 4 is specific to each frame: if you have 100 frames, each frame is associated with its own bounding boxes + lidar points. For example:
  - Frame 1 → 15 bounding boxes + lidar points
  - Frame 2 → 11 bounding boxes + lidar points
  - ...etc

- To measure the relative motion of the bounding boxes and the change in their dimensions, we need to connect them across frames. This is done with the camera, using the matched keypoint descriptors. We track bounding boxes in space and calculate the TTC for each object in front of us.
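One way to connect bounding boxes across frames is to let the keypoint matches vote: each match whose two keypoints fall inside a box in the previous frame and a box in the current frame counts as one vote for that box pair. The sketch below assumes this voting scheme; the box layout (`id`, `x`, `y`, `w`, `h`) and the helper names `boxes_containing` and `match_bounding_boxes` are hypothetical.

```python
from collections import Counter


def boxes_containing(point, boxes):
    """Return the ids of all boxes that enclose the given (x, y) point."""
    x, y = point
    return [
        b["id"]
        for b in boxes
        if b["x"] <= x < b["x"] + b["w"] and b["y"] <= y < b["y"] + b["h"]
    ]


def match_bounding_boxes(matches, prev_kpts, curr_kpts, prev_boxes, curr_boxes):
    """Map each previous box id to the current box id with the most votes."""
    votes = Counter()
    for i, j in matches:
        for pb in boxes_containing(prev_kpts[i], prev_boxes):
            for cb in boxes_containing(curr_kpts[j], curr_boxes):
                votes[(pb, cb)] += 1

    best = {}
    for (pb, cb), n in votes.items():
        if pb not in best or n > best[pb][1]:
            best[pb] = (cb, n)
    return {pb: cb for pb, (cb, _) in best.items()}


# Toy data; all coordinates are invented for illustration.
prev_boxes = [{"id": 0, "x": 0, "y": 0, "w": 100, "h": 100},
              {"id": 1, "x": 200, "y": 0, "w": 100, "h": 100}]
curr_boxes = [{"id": 0, "x": 10, "y": 0, "w": 100, "h": 100},
              {"id": 1, "x": 210, "y": 0, "w": 100, "h": 100}]
prev_kpts = [(50, 50), (60, 40), (250, 50), (150, 50), (70, 70)]
curr_kpts = [(60, 50), (70, 40), (260, 50), (160, 50)]

# (prev_index, curr_index); the last pair is a deliberate mismatch.
matches = [(0, 0), (1, 1), (2, 2), (3, 3), (4, 2)]

box_matches = match_bounding_boxes(matches, prev_kpts, curr_kpts,
                                   prev_boxes, curr_boxes)
```

Majority voting makes the association tolerant of a few wrong keypoint matches, such as the deliberate mismatch in the toy data.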

Keypoints:

- You need to learn how to find them

- How to describe them in a unique way

- How to find them again in another image

Early Fusion vs Late Fusion

In Early Fusion, the fusion algorithm has access to the raw data of the camera, lidar, ... and processes everything together to produce one final output.

In Late Fusion, every sensor first does its best on its own: track objects in camera, track objects in lidar, track objects in radar, ... Track-to-track fusion then happens at the very end, combining all the tracks into one final output.

💡

However, there is a spectrum in between: you can also fuse at intermediate levels, above the raw data of early fusion but below the finished tracks of late fusion.
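To make the late-fusion idea concrete, here is a minimal sketch of the final combining step. It assumes each sensor track reports a distance to the same object together with a variance, and it combines them by inverse-variance weighting. That weighting is one common choice picked here for illustration, not something the notes prescribe; the function name `fuse_tracks` and all numbers are hypothetical.

```python
def fuse_tracks(estimates):
    """Fuse per-sensor (distance_m, variance) estimates of one object.

    Uses inverse-variance weighting: more certain sensors count more.
    Returns the fused distance and its variance.
    """
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    fused = sum(w * d for w, (d, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total


# Toy per-sensor tracks for one object; values are invented.
camera_track = (10.0, 4.0)  # distance in metres, variance
lidar_track = (8.0, 1.0)

fused_distance, fused_variance = fuse_tracks([camera_track, lidar_track])
```

The fused estimate lands closer to the lidar value because the lidar track is assumed to be the more certain one.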