Introduction to LiDAR and Point Clouds

In sensor fusion, combining lidar's high-resolution imaging with radar's ability to measure the velocity of objects gives a better understanding of the surrounding environment than either sensor could provide alone.

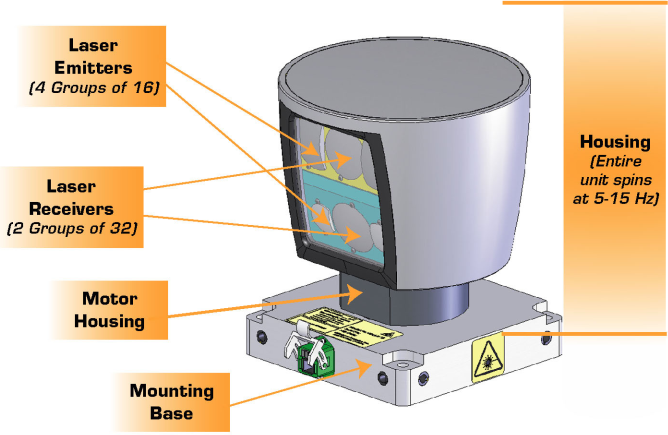

- Lidar: Light Detection and Ranging. A lidar consists of a laser, a deflection mechanism that scans the laser beam across the field of view, and a photodetector that detects the photons reflected from objects. For each point in the field of view you get the distance to an object, or no distance if there is no object. The laser sends out very short pulses (a few nanoseconds).

- Lidars are currently very expensive, upwards of $60,000 for a standard unit.

- A lidar sends out thousands of laser rays at different angles.

- Each laser pulse is emitted, reflects off obstacles, and is then detected by a receiver.

- The distance is calculated from the time difference between emitting and receiving the pulse.

- The intensity of the returned laser light is also measured and can be used to evaluate the material properties of the object it reflected off.
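The time-of-flight rule described in these bullets fits in a few lines. This is a minimal sketch, not code from the course; the function name `rangeFromRoundTrip` is hypothetical. The pulse travels to the object and back, so only half the round-trip time counts toward the distance.

```cpp
#include <cassert>

// Speed of light in vacuum, in meters per second.
constexpr double kSpeedOfLight = 299792458.0;

// Hypothetical helper: distance to the reflecting object from the measured
// round-trip time of one laser pulse. The pulse covers the distance twice
// (out and back), so the one-way range uses half of the elapsed time.
double rangeFromRoundTrip(double roundTripSeconds) {
    assert(roundTripSeconds >= 0.0);  // time of flight can never be negative
    return kSpeedOfLight * roundTripSeconds / 2.0;
}
```

For example, `rangeFromRoundTrip(66.7e-9)` returns a value very close to 10 m.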

Velodyne lidar sensors: HDL 64, HDL 32, and VLP 16, from left to right. The larger the sensor, the higher the resolution.

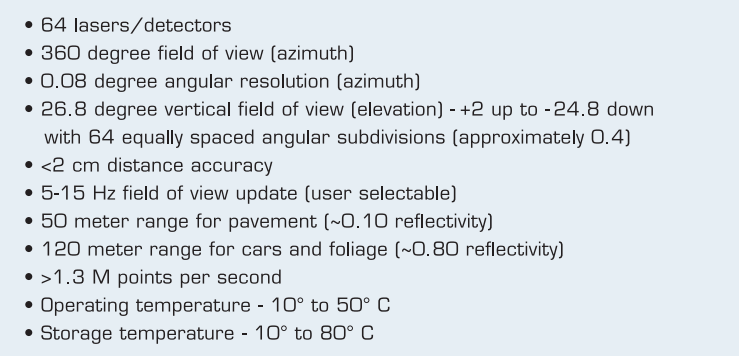

Here are the specs for an HDL 64 lidar. The lidar has 64 layers, each emitted at a different angle from the z-axis (that is, at a different inclination). Each layer covers a 360-degree view with an angular resolution of 0.08 degrees. On average, the lidar scans ten times a second. It can detect cars and foliage up to 120 m away, and pavement up to 50 m away.

- With 64 layers, an angular resolution of 0.08 degrees, and an average update rate of 10 Hz, the sensor collects 64 × (360 / 0.08) × 10 = 2,880,000 points per second.
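The same arithmetic as a small helper, as a sketch under the assumption that every layer fires once per angular step on every revolution; `pointsPerSecond` is a hypothetical name. The result is rounded once at the end because 0.08 has no exact binary floating-point representation.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helper: points per second =
//   layers x (points per 360-degree revolution) x revolutions per second.
std::int64_t pointsPerSecond(int layers, double angularResolutionDeg, double scanRateHz) {
    assert(layers > 0 && angularResolutionDeg > 0.0 && scanRateHz > 0.0);
    // Firings per layer in one full revolution (360 / 0.08 = 4500 for the HDL 64).
    const double pointsPerRevolution = 360.0 / angularResolutionDeg;
    // Round to the nearest integer so floating-point error cannot truncate the count.
    return std::llround(layers * pointsPerRevolution * scanRateHz);
}
```

Calling `pointsPerSecond(64, 0.08, 10.0)` reproduces the 2,880,000 figure.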

Types of lidars:

1. Scanning lidars, which use micro-mirrors or larger mirrors to scan the laser beam across the FOV.
2. Solid-state lidars, which use the phased-array principle: phase differences are used to steer the beam.
3. Lidars that use the dispersion relationship and prisms.

The power a lidar uses depends on a few things. More interesting is the output power of the laser, because it depends on the wavelength, which in turn is tied to eye safety. A laser in the 905 nm range uses about 2 mW. A laser in the 1500 nm range can use up to 10 times more power, so it can reach further than the shorter-wavelength laser while being just as eye safe, but its components are more expensive.

Eye safe means that you can look into the laser beam without it damaging your eyes. Automotive lidars use Class 1 lasers.

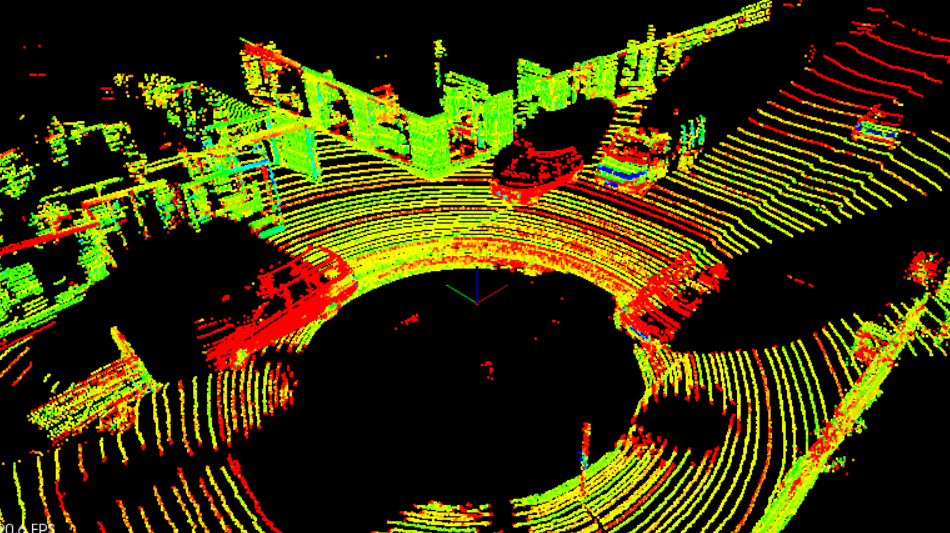

Point Cloud

A point cloud is the set of all measured lidar reflections. Each point corresponds to a laser beam that travels to an object and is reflected back.

The amount of data a lidar generates depends on the lidar's specifications (number of layers in the lidar, ...), but it is roughly 100 MB/s.

Point Cloud Data (PCD) file

- Each point is stored as (x, y, z, I): (x, y, z, I), (x, y, z, I), ...

- I (Intensity) tells us about the reflective properties of the material.
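To make the (x, y, z, I) layout concrete, here is a sketch of a point record and a reader for rows of four whitespace-separated numbers. This is a simplified, hypothetical helper (`parseXYZI` is not a PCL function): a real `.pcd` file also has a header (fields, point count, data format) that this code does not handle, and PCL provides its own reader for that.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// One lidar return: position in meters plus reflected intensity.
struct PointXYZI {
    float x, y, z, intensity;
};

// Hypothetical helper: parses rows of "x y z I" (one point per line).
// Simplified sketch: no .pcd header handling.
std::vector<PointXYZI> parseXYZI(const std::string& text) {
    std::vector<PointXYZI> cloud;
    std::istringstream rows(text);
    std::string row;
    while (std::getline(rows, row)) {
        std::istringstream fields(row);
        PointXYZI p{};
        // Keep only rows that contain four readable numbers; skip anything else.
        if (fields >> p.x >> p.y >> p.z >> p.intensity) {
            cloud.push_back(p);
        }
    }
    return cloud;
}
```

For instance, parsing `"1.0 2.0 3.0 0.5\n4 5 6 1\n"` yields two points, the first with intensity 0.5.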

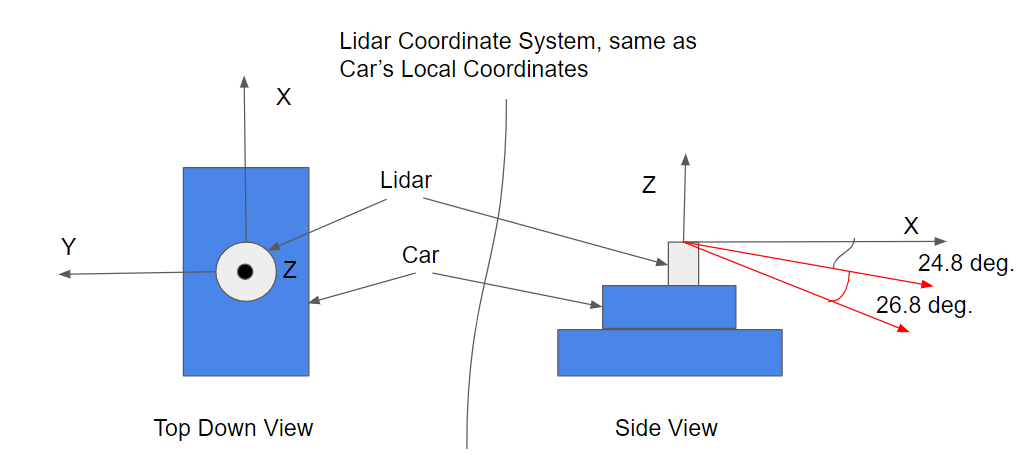

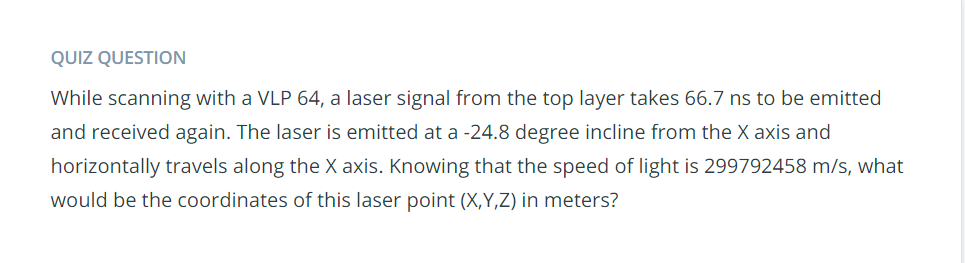

First, the distance of the ray is calculated. The round trip takes 66.7 ns, so the ray takes half that time to reach the object. The distance is then $d = c \cdot \frac{t}{2} = 299792458 \cdot \frac{66.7}{2} \cdot 10^{-9} \approx 10\,\text{m}$. The ray points along the X-axis, so its Y component is 0. The X and Z components follow from some trigonometry: $X = 10\,\text{m} \cdot \sin(90^\circ - 24.8^\circ) \approx 9.08\,\text{m}$ and $Z = -10\,\text{m} \cdot \cos(90^\circ - 24.8^\circ) \approx -4.19\,\text{m}$.
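The worked example generalizes to any ray once you fix an angle convention. The sketch below assumes (hypothetically) that azimuth is measured in the x-y plane from the +x axis and elevation from the horizontal plane, negative pointing down. With azimuth 0 and elevation -24.8°, $d\cos(-24.8^\circ)$ equals $d\sin(90^\circ - 24.8^\circ)$ and $d\sin(-24.8^\circ)$ equals $-d\cos(90^\circ - 24.8^\circ)$, matching the hand calculation.

```cpp
#include <cassert>
#include <cmath>

struct Point3 { double x, y, z; };

constexpr double kSpeedOfLight = 299792458.0;  // m/s
constexpr double kPi = 3.14159265358979323846;

// Hypothetical helper: converts one lidar return (round-trip time plus beam
// angles) into Cartesian coordinates in the sensor frame.
// Assumed convention: azimuth from +x in the x-y plane, elevation from the
// horizontal plane (negative = below horizontal).
Point3 toCartesian(double roundTripSeconds, double azimuthDeg, double elevationDeg) {
    assert(roundTripSeconds >= 0.0);
    const double range = kSpeedOfLight * roundTripSeconds / 2.0;  // one-way distance
    const double az = azimuthDeg * kPi / 180.0;
    const double el = elevationDeg * kPi / 180.0;
    const double horizontal = range * std::cos(el);  // projection onto the x-y plane
    return {horizontal * std::cos(az), horizontal * std::sin(az), range * std::sin(el)};
}
```

`toCartesian(66.7e-9, 0.0, -24.8)` gives approximately (9.08, 0, -4.19); the small differences from the hand calculation come from the range being 9.998 m rather than exactly 10 m.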

Point Cloud Library (PCL)

PCL is an open-source C++ library for working with point clouds. It is used to visualize data and render shapes, and it provides many helpful built-in processing functions, including several that help detect obstacles. PCL is widely used in the robotics community for working with point cloud data, and many tutorials for it are available online. The built-in PCL functions used later in this module are segmentation, extraction, and clustering.

Lidars are mounted on the roof to maximize the FOV, but a roof-mounted lidar will not see anything happening close to the vehicle near the ground. To cover the full FOV, multiple lidars are mounted in different places (wherever there are gaps in the overall sensor coverage).

Vertical field of view: most lidars have roughly the same vertical field of view, approximately 30 degrees, independent of the number of layers. With 30 degrees and 16 layers, adjacent layers are about 2 degrees apart. At about 60 meters, a 2-degree gap is large enough to hide a pedestrian between two layers, which limits the effective resolution and how far you can see: if no scan layer hits an object, the object is invisible to the lidar. The more layers in the vertical field of view, the finer the granularity, the more objects you can see, and the further you can see.
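The pedestrian argument can be checked numerically. This sketch assumes the layers are spread evenly with the outermost beams at the edges of the field of view, so 16 layers over 30 degrees leave 15 gaps of exactly 2 degrees; both function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

constexpr double kPi = 3.14159265358979323846;

// Hypothetical helper: angle between adjacent layers when `layers` beams are
// spread evenly over the vertical FOV (outermost beams at the FOV edges).
double layerSpacingDeg(double verticalFovDeg, int layers) {
    assert(layers >= 2 && verticalFovDeg > 0.0);
    return verticalFovDeg / (layers - 1);
}

// Hypothetical helper: approximate vertical distance between two adjacent
// layers at a given range, i.e. the height of the band no beam covers.
double gapAtRange(double spacingDeg, double rangeMeters) {
    assert(rangeMeters >= 0.0);
    return rangeMeters * std::tan(spacingDeg * kPi / 180.0);
}
```

With 16 layers the gap at 60 m is about 2.1 m, taller than most pedestrians; with 64 layers over the same 30 degrees it shrinks to roughly 0.5 m.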

Granularity of a lidar:

PCL Viewer

The viewer handles all the graphics for us.

The viewer is usually passed into functions by reference. That way the process is more streamlined: the function modifies the caller's viewer directly, so nothing needs to be returned.
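A sketch of the pass-by-reference pattern. To keep it self-contained, `Viewer` here is a hypothetical stand-in that only records what was drawn, not PCL's visualizer; in PCL-based code the parameter is commonly a reference to `pcl::visualization::PCLVisualizer::Ptr`. The drawing function name `renderRoad` is also hypothetical.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for a 3D viewer: it just records the names of drawn shapes.
struct Viewer {
    std::vector<std::string> shapes;
    void addShape(const std::string& name) { shapes.push_back(name); }
};

// Receives a reference to the caller's viewer and draws into it directly,
// so the viewer is neither copied in nor returned.
void renderRoad(Viewer& viewer) {
    viewer.addShape("road");
    viewer.addShape("egoCar");
}
```

After `Viewer v; renderRoad(v);`, the caller's `v` already contains both shapes.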

How do you represent a lidar in a simulator?

Modeling a lidar in a simulator is useful because you don't have to make many assumptions: the lidar is represented by multiple beams, which can be ray traced in real time on GPUs. However, the simulated environment is only an approximation of the real world in terms of reflectivity and material properties.

Note: The arguments passed to the Lidar are needed for modeling ray collisions. The Lidar object will hold point cloud data, which can be very large. By instantiating it on the heap, we have more memory to work with than on the small stack (only a few MB, e.g. 2 MB). However, allocating on the heap is slower than allocating on the stack, where allocation is very fast.
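A sketch of why heap allocation matters here. The `Lidar` below is a hypothetical stand-in, not the course's class: it embeds a fixed-size buffer of about 3.2 MB directly in the object, so declaring it as a local variable could overflow a small stack. `std::make_unique` is used instead of a raw `new` so the memory is released automatically; only the smart pointer itself lives on the stack.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <memory>

// Hypothetical stand-in for a Lidar that stores its points inside the object:
// 200,000 points x 4 floats x 4 bytes = about 3.2 MB.
struct Lidar {
    static constexpr std::size_t kMaxPoints = 200000;
    std::array<std::array<float, 4>, kMaxPoints> points{};
    std::size_t count = 0;
};

// Allocates the (large) Lidar on the heap and returns an owning pointer.
std::unique_ptr<Lidar> makeLidar() {
    return std::make_unique<Lidar>();
}
```

Note that containers such as `std::vector` already keep their elements on the heap; the stack concern applies to large data stored directly inside the object.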

Templates

- PCL uses templates for different point types, e.g. `PointXYZ` (plain 3D), `PointXYZI` (with intensity), `PointXYZRGB` (with color), ...

- Templates allow us to write reusable code. Instead of defining a separate function for each type of point cloud, you write the function once and pass the point type as a template argument.
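A sketch of "write the function once" with self-contained stand-ins. The two structs mimic the names of PCL's point types but are hypothetical minimal versions (the real ones come from PCL's point type headers), and `countPointsAbove` is an illustrative function, not a PCL call. Any point type with a `z` member works.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical minimal stand-ins for PCL point types.
struct PointXYZ  { float x, y, z; };
struct PointXYZI { float x, y, z, intensity; };

// One definition serves every point type: PointT is filled in by the compiler
// at each call site (PointXYZ, PointXYZI, ...).
template <typename PointT>
std::size_t countPointsAbove(const std::vector<PointT>& cloud, float minZ) {
    std::size_t n = 0;
    for (const PointT& p : cloud) {
        if (p.z > minZ) ++n;
    }
    return n;
}
```

The same template is instantiated once for `std::vector<PointXYZ>` and once for `std::vector<PointXYZI>`, without writing two functions.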

`typename` is needed whenever you refer to a type nested inside a template that depends on a template parameter, such as the `Ptr` pointer type in `pcl::PointCloud<PointT>::Ptr`. The reason is the following: given a name with a template parameter, like `pcl::PointCloud<PointT>::Ptr`, the compiler cannot determine whether it is a value or a type without knowing what the parameter is, so it assumes the name represents a value. If the name actually represents a type, you need to say so with `typename`. `PointT` is the template parameter that can stand in for any of these different kinds of points.

Is `pcl::PointCloud<PointT>::Ptr` a value or a type? The point cloud's `Ptr` is a typedef for `boost::shared_ptr` (newer PCL versions use `std::shared_ptr`), so it is a type.

- Anywhere you use the `::Ptr` of a templated PCL `PointCloud` (one that depends on `PointT`), you need `typename`.
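A sketch of where `typename` goes. `PointCloud` here is a hypothetical minimal stand-in that, like PCL's class, exposes a nested `Ptr` alias for a shared pointer to the cloud; `makeCloud` is an illustrative function. Before C++20, omitting either `typename` is a compile error, because `PointCloud<PointT>::Ptr` depends on `PointT`.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical stand-in for pcl::PointCloud with a nested Ptr alias.
template <typename PointT>
struct PointCloud {
    using Ptr = std::shared_ptr<PointCloud<PointT>>;
    std::vector<PointT> points;
};

struct PointXYZ { float x, y, z; };

// PointCloud<PointT>::Ptr is a dependent name: `typename` tells the compiler
// it names a type (in the return type and in the local declaration).
template <typename PointT>
typename PointCloud<PointT>::Ptr makeCloud(std::size_t n) {
    typename PointCloud<PointT>::Ptr cloud = std::make_shared<PointCloud<PointT>>();
    cloud->points.resize(n);
    return cloud;
}
```

With real PCL the same pattern commonly reads `typename pcl::PointCloud<PointT>::Ptr cloud (new pcl::PointCloud<PointT>);`.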

LiDAR Parameters

- Resolution (number of vertical layers): as the number of layers increases, the resolution increases.

- Horizontal Layer Increment (angular resolution around the z-axis): as this decreases, the resolution improves.

- Minimum Distance: some laser rays hit the roof of the ego car, and those don't tell you much about the environment. Filter them out: if a point is too close to the lidar sensor, assume it is just the roof of the car and ignore it.

- Add Noise: this creates more interesting PCD data and lets you develop more robust algorithms for processing it.
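The minimum-distance filter and the noise parameter can be sketched on plain points. Both functions are hypothetical illustrations, not the course's Lidar code (which may apply these steps to the ray distance instead): the first drops returns closer than `minDistance` to the sensor origin, the second adds zero-mean Gaussian noise with standard deviation `sd` (meters) using a fixed seed so runs are reproducible.

```cpp
#include <cassert>
#include <cmath>
#include <random>
#include <vector>

struct PointXYZ { float x, y, z; };

// Hypothetical helper: keeps only returns at least minDistance from the sensor
// origin, discarding rays that hit the ego car's own roof.
std::vector<PointXYZ> removeCloseReturns(const std::vector<PointXYZ>& cloud, float minDistance) {
    std::vector<PointXYZ> kept;
    for (const PointXYZ& p : cloud) {
        const float dist = std::sqrt(p.x * p.x + p.y * p.y + p.z * p.z);
        if (dist >= minDistance) kept.push_back(p);
    }
    return kept;
}

// Hypothetical helper: perturbs every coordinate with zero-mean Gaussian noise.
void addNoise(std::vector<PointXYZ>& cloud, float sd, unsigned seed) {
    assert(sd > 0.0f);  // std::normal_distribution requires a positive stddev
    std::mt19937 rng(seed);
    std::normal_distribution<float> noise(0.0f, sd);
    for (PointXYZ& p : cloud) {
        p.x += noise(rng);
        p.y += noise(rng);
        p.z += noise(rng);
    }
}
```

A point 0.54 m from the sensor is dropped with `minDistance = 1.0f`, while a point about 10 m away is kept and then only slightly shifted by `addNoise` with `sd = 0.01f`.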

Visualize PCD Data

To visualize PCD data, we use renderCloud instead of renderRays.

→ By default, the point cloud is rendered in white.

→ renderCloud lets you give the point cloud a name. This way you can show multiple point clouds in the viewer, and each one can be identified.

Flag in environment.cpp: renderScene

Set renderScene to false if you want to render the point cloud by itself, without any of the cars in the street.