Harris Corner Detection

The Harris detector looks for areas of significant change in an image by evaluating a local neighborhood around each pixel. Based on this concept, Harris defined a so-called cornerness measure, which takes the image gradients as input and returns a number that is high at locations where the intensity changes in all directions.

Local Measures of Uniqueness

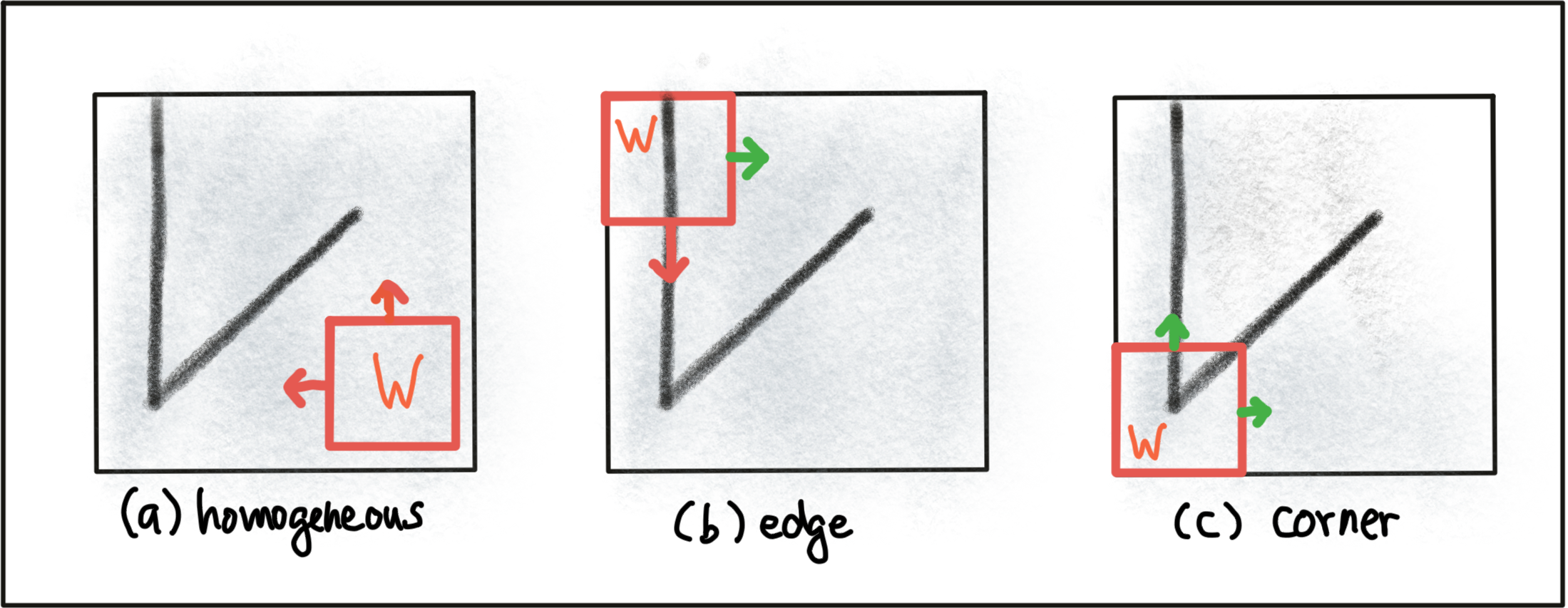

The idea of keypoint detection is to detect a unique structure in an image that can be precisely located in both coordinate directions. As discussed in the previous section, corners are ideally suited for this purpose. To illustrate this, the following figure shows an image patch which consists of line structures on a homogeneously colored background. A red arrow indicates that no unique position can be found in this direction. The green arrow expresses the opposite. As can be seen, the corner is the only local structure that can be assigned a unique coordinate in x and y.

In order to locate a corner, we consider how the content of the window would change when shifting it by a small amount. In case (a) in the figure above, there is no measurable change in any coordinate direction at the current location of the red window W. In case (b), there will be significant change in the direction orthogonal to the edge and no change when moving along the edge. In case (c), the window content will change in every coordinate direction.

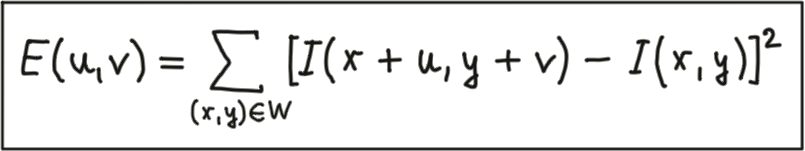

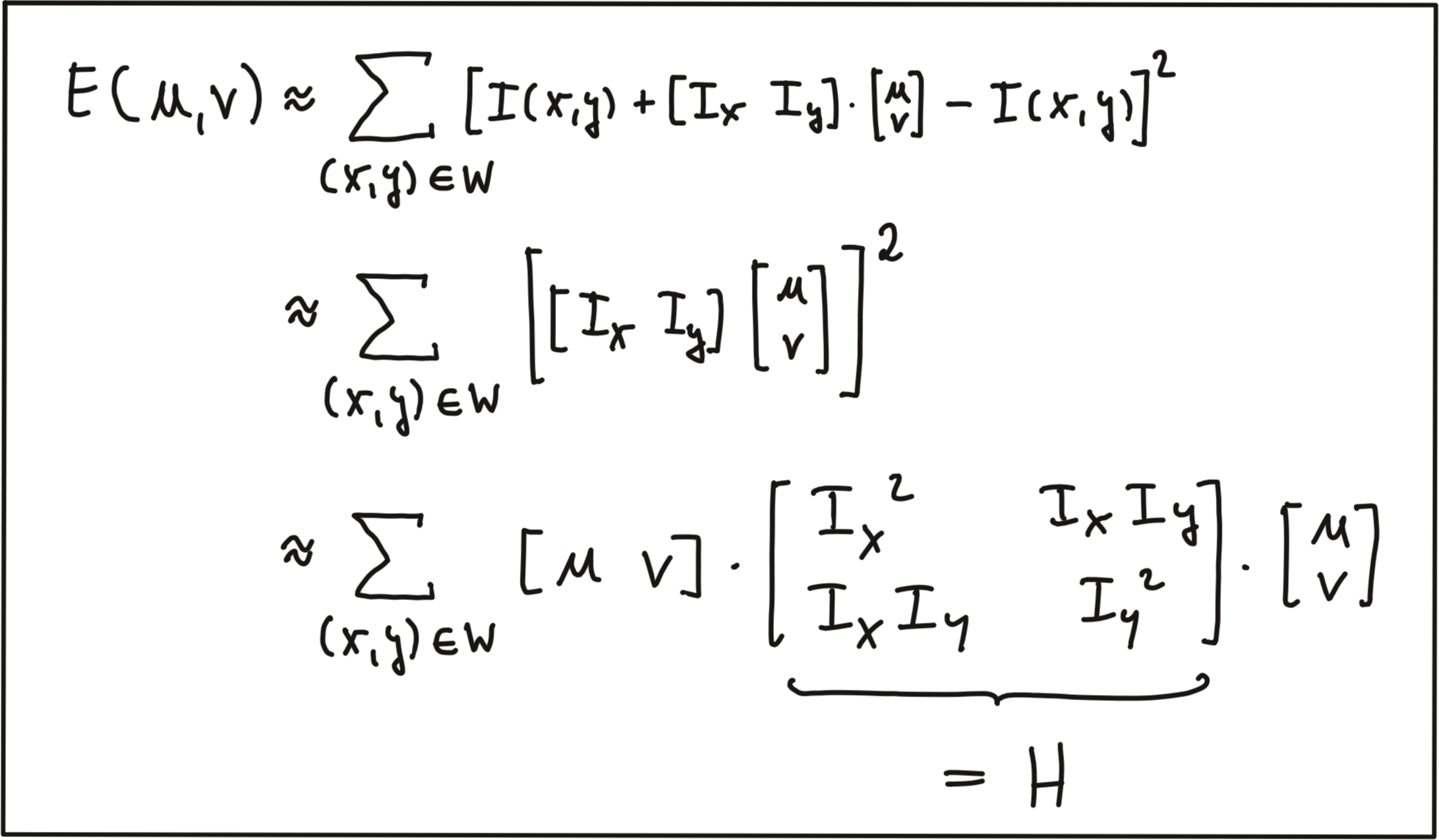

The idea behind locating corners algorithmically is to detect areas where the image structure changes significantly when a local window W is displaced. A suitable measure for describing such change mathematically is the sum of squared differences (SSD), which compares all pixels in a local neighborhood before and after a coordinate shift. The equation below illustrates the concept.
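Written in the notation of this section (window $W$, shift $u$ in x-direction and $v$ in y-direction, image intensity $I$), the SSD reads as follows. This is a reconstruction of the standard definition:

$$
E(u, v) = \sum_{(x, y) \in W} \big[ I(x + u,\, y + v) - I(x, y) \big]^2
$$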

After shifting the window W by an amount u in x-direction and v in y-direction, the equation sums up the squared differences of all pixels within W at the old and at the new window position. In the following, we will use some mathematical transformations to derive a measure for the change of the local environment around a pixel from the general definition of the SSD.
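To make cases (a) to (c) concrete, here is a minimal C++ sketch (standard library only, no OpenCV) that evaluates the SSD for a one-pixel shift on three synthetic patterns: a flat patch, a vertical edge and a corner. The `Image` struct and the functions `ssd` and `makePattern` are hypothetical helpers written for this illustration, and border handling is deliberately left out (the shifted window is assumed to stay inside the image).

```cpp
#include <cassert>
#include <vector>

// Hypothetical minimal grayscale image (row-major), used only for this illustration.
struct Image {
    int width;
    int height;
    std::vector<double> data;
    double at(int x, int y) const {
        assert(x >= 0 && x < width && y >= 0 && y < height);
        return data[y * width + x];
    }
};

// E(u, v): sum of squared differences between the window W centered at (cx, cy)
// and the same window shifted by (u, v). W has (2 * halfSize + 1)^2 pixels.
double ssd(const Image& img, int cx, int cy, int halfSize, int u, int v) {
    double sum = 0.0;
    for (int y = cy - halfSize; y <= cy + halfSize; ++y) {
        for (int x = cx - halfSize; x <= cx + halfSize; ++x) {
            double diff = img.at(x + u, y + v) - img.at(x, y);
            sum += diff * diff;
        }
    }
    return sum;
}

// Synthetic test pattern: a pixel is bright (1.0) if it lies right of the
// vertical step (when stepInX) and below the horizontal step (when stepInY).
// No steps -> flat patch, stepInX only -> vertical edge, both -> corner.
Image makePattern(int size, bool stepInX, bool stepInY) {
    Image img{size, size, std::vector<double>(size * size, 0.0)};
    for (int y = 0; y < size; ++y) {
        for (int x = 0; x < size; ++x) {
            bool bright = (!stepInX || x >= size / 2) && (!stepInY || y >= size / 2);
            img.data[y * size + x] = bright ? 1.0 : 0.0;
        }
    }
    return img;
}
```

For a 10×10 pattern with the window centered at (5, 5) and `halfSize = 2`, the flat patch yields 0 for shifts in both x and y, the edge yields a non-zero value (5) only for the shift in x, and the corner yields a non-zero value (3) for both shifts.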

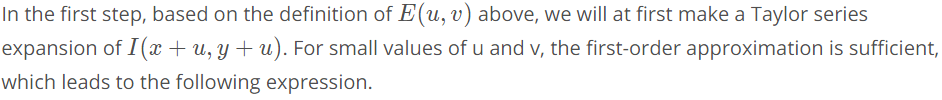

The derivative of the image intensity $I$ in both x- and y-direction is something you have already learned about in the previous section: it is simply the intensity gradient. From this point on, we will use the shorthand notation shown above ($I_x$ and $I_y$) to express the gradient.
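As a reminder of how $I_x$ and $I_y$ can be obtained in practice, the sketch below applies the 3×3 Sobel kernels (the operator whose aperture size `apertureSize` sets in the OpenCV code later in this section) at a single interior pixel. The function name `sobel`, the `Gradient` struct and the flat row-major `std::vector` image layout are assumptions for this illustration. The result is unnormalized, so an intensity ramp that increases by 1 per pixel yields a gradient of 8.

```cpp
#include <cassert>
#include <vector>

struct Gradient {
    double ix; // derivative in x-direction
    double iy; // derivative in y-direction
};

// Gradient (I_x, I_y) at pixel (x, y) using the unnormalized 3x3 Sobel kernels
//   x: [-1 0 1; -2 0 2; -1 0 1]   y: [-1 -2 -1; 0 0 0; 1 2 1]
// img is row-major; (x, y) must not lie on the image border.
Gradient sobel(const std::vector<double>& img, int width, int height, int x, int y) {
    assert(x > 0 && y > 0 && x < width - 1 && y < height - 1);
    auto I = [&](int xx, int yy) { return img[yy * width + xx]; };
    double ix = (I(x + 1, y - 1) + 2 * I(x + 1, y) + I(x + 1, y + 1))
              - (I(x - 1, y - 1) + 2 * I(x - 1, y) + I(x - 1, y + 1));
    double iy = (I(x - 1, y + 1) + 2 * I(x, y + 1) + I(x + 1, y + 1))
              - (I(x - 1, y - 1) + 2 * I(x, y - 1) + I(x + 1, y - 1));
    return {ix, iy};
}
```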

In the second step, we insert the approximated expression for $I(x+u, y+v)$ into the SSD equation above, which simplifies it to a quadratic form in u and v.

The result of these mathematical transformations is a matrix H, which can now be conveniently analyzed to locate structural change in a local window W around every pixel position (x, y) in an image. In the literature, the matrix H is often referred to as the covariance matrix (also known as the structure tensor).
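For reference, here is a reconstruction of the derivation in its usual form, assuming the first-order Taylor approximation and the shorthand $I_x = \partial I / \partial x$, $I_y = \partial I / \partial y$ (both evaluated at $(x, y)$), with $E(u, v)$ denoting the SSD of the shifted window:

$$
I(x + u,\, y + v) \approx I(x, y) + u\, I_x + v\, I_y
$$

$$
E(u, v) \approx \sum_{(x, y) \in W} \big( u\, I_x + v\, I_y \big)^2
= \begin{bmatrix} u & v \end{bmatrix} H \begin{bmatrix} u \\ v \end{bmatrix},
\qquad
H = \sum_{(x, y) \in W} \begin{bmatrix} I_x^2 & I_x I_y \\ I_x I_y & I_y^2 \end{bmatrix}
$$

Expanding both sides term by term gives $u^2 \sum I_x^2 + 2uv \sum I_x I_y + v^2 \sum I_y^2$, which confirms the matrix form. When a Gaussian window is used, each summand is additionally multiplied by the weight $w(x, y)$.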

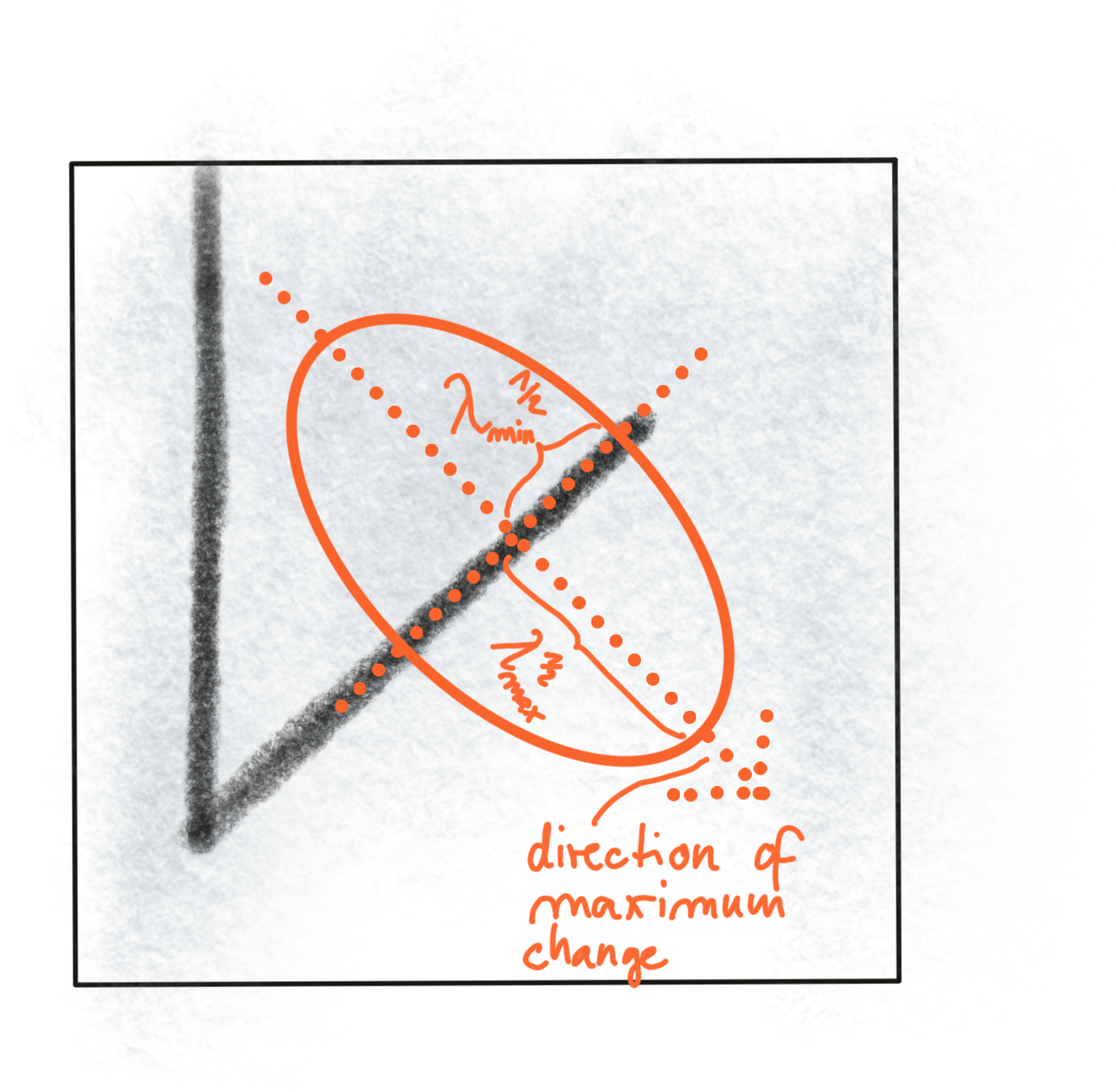

To do this, it helps to visualize the matrix H as an ellipse whose axis lengths and directions are given by its eigenvalues and eigenvectors. As can be seen in the following figure, the eigenvector belonging to the larger eigenvalue points in the direction of maximal intensity change, whereas the eigenvector belonging to the smaller eigenvalue points in the direction of minimal change. So in order to identify corners, we need to find positions in the image where both eigenvalues of H are significantly large.

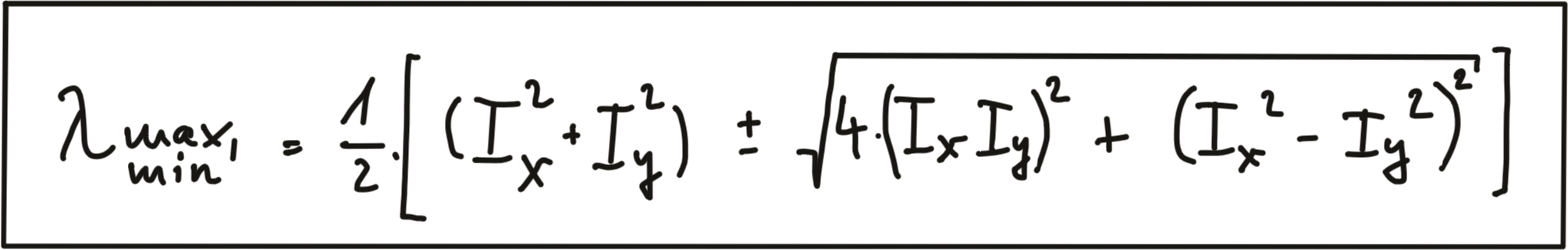

Without going into the details of eigenvalues in this course, we will look at a simple formula for computing them from H:

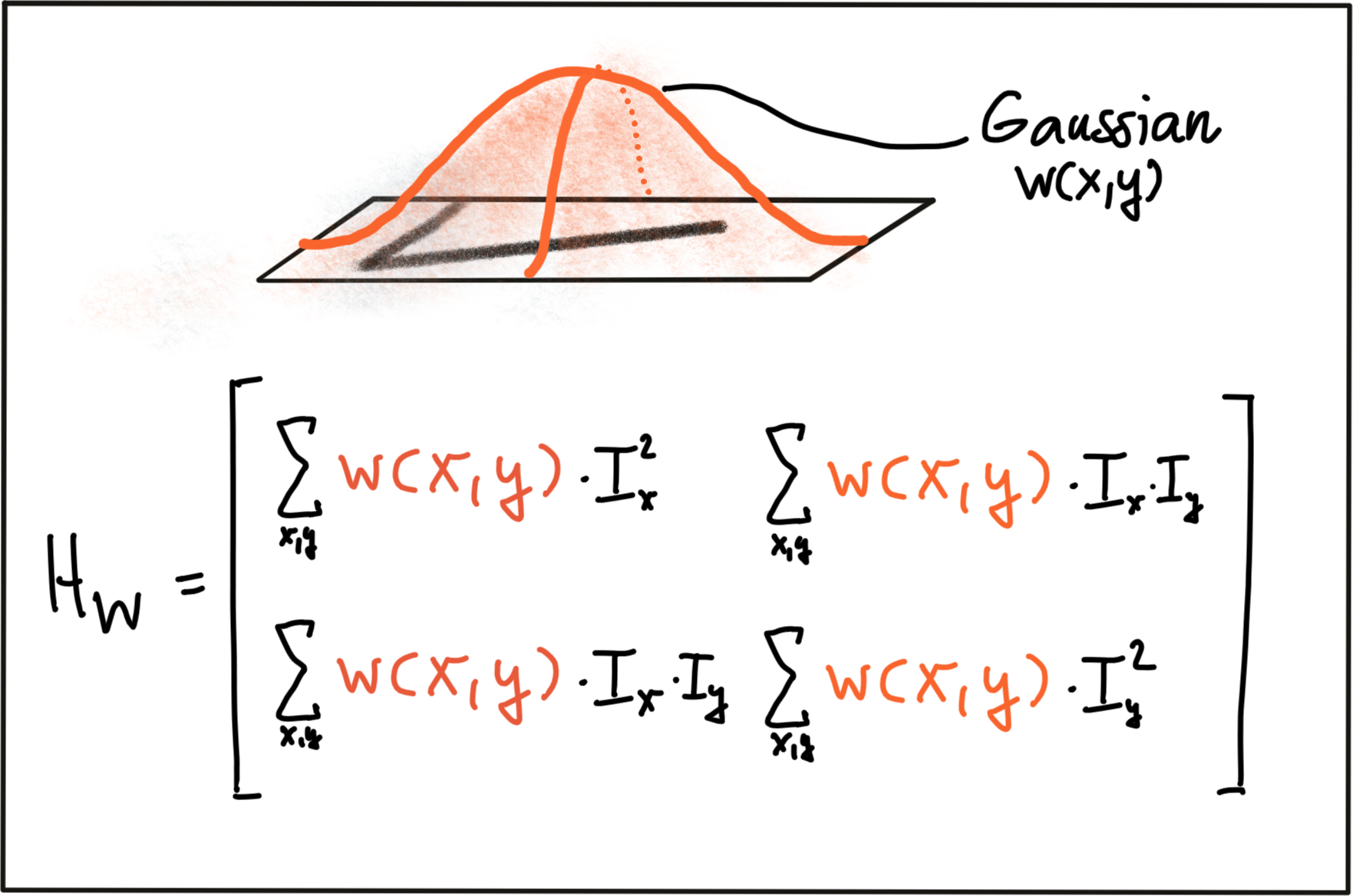
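The closed form for a symmetric 2×2 matrix fits into a small C++ function. This is a sketch assuming $H = \begin{bmatrix} a & b \\ b & c \end{bmatrix}$; it uses one common way of writing the closed form, $\lambda_{1,2} = \tfrac{a+c}{2} \pm \sqrt{\left(\tfrac{a-c}{2}\right)^2 + b^2}$, which may be arranged differently from the formula in the figure. The names `Eigenvalues` and `eigenvalues2x2` are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Eigenvalues {
    double larger;
    double smaller;
};

// Eigenvalues of the symmetric 2x2 matrix H = [a b; b c].
// Because H is symmetric, both eigenvalues are real.
Eigenvalues eigenvalues2x2(double a, double b, double c) {
    // Diagonal entries of H are sums of squared gradients, hence non-negative.
    assert(a >= 0.0 && c >= 0.0);
    double mean = 0.5 * (a + c);
    double radius = std::sqrt(0.25 * (a - c) * (a - c) + b * b);
    return {mean + radius, mean - radius};
}
```

For H = diag(4, 1) this returns 4 and 1; for an edge-like H = diag(1, 0) the smaller eigenvalue is 0, which is exactly the situation that must be rejected when looking for corners.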

In addition to the smoothing of the image before gradient computation, the Harris detector uses a Gaussian window w(x,y) to compute a weighted sum of the intensity gradients around a local neighborhood. The size of this neighborhood is called scale in the context of feature detection and it is controlled by the standard deviation of the Gaussian distribution.

As can be seen, the larger the scale of the Gaussian window, the larger the image region beneath it that contributes to the sum of gradients. By adjusting the scale, we can thus control the size of the structures we are able to detect as keypoints.
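The following C++ sketch shows how a Gaussian window $w(x, y)$ enters the computation of H at a single pixel. Several details are assumptions rather than part of the text: the gradients are given as row-major `std::vector<double>` arrays, the window is truncated at a radius of $\lceil 3\sigma \rceil$, the weights are not normalized, and pixels outside the image are skipped. The names `StructureTensor` and `weightedTensor` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// H = [a b; b c]
struct StructureTensor {
    double a;
    double b;
    double c;
};

// Gaussian-weighted sums of gradient products around pixel (x, y):
//   a = sum w * Ix^2,  b = sum w * Ix * Iy,  c = sum w * Iy^2
// with w(dx, dy) = exp(-(dx^2 + dy^2) / (2 * sigma^2)) and a window radius of ceil(3 * sigma).
StructureTensor weightedTensor(const std::vector<double>& ix, const std::vector<double>& iy,
                               int width, int height, int x, int y, double sigma) {
    assert(sigma > 0.0);
    int radius = static_cast<int>(std::ceil(3.0 * sigma));
    StructureTensor h{0.0, 0.0, 0.0};
    for (int dy = -radius; dy <= radius; ++dy) {
        for (int dx = -radius; dx <= radius; ++dx) {
            int px = x + dx;
            int py = y + dy;
            if (px < 0 || py < 0 || px >= width || py >= height) continue; // outside image
            double w = std::exp(-(dx * dx + dy * dy) / (2.0 * sigma * sigma));
            double gx = ix[py * width + px];
            double gy = iy[py * width + px];
            h.a += w * gx * gx;
            h.b += w * gx * gy;
            h.c += w * gy * gy;
        }
    }
    return h;
}
```

Increasing `sigma` widens the window: a gradient located three pixels away from the center is ignored for `sigma = 0.5` (radius 2) but contributes for `sigma = 2.0` (radius 6), which mirrors the scale effect described above.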

The Harris Corner Detector

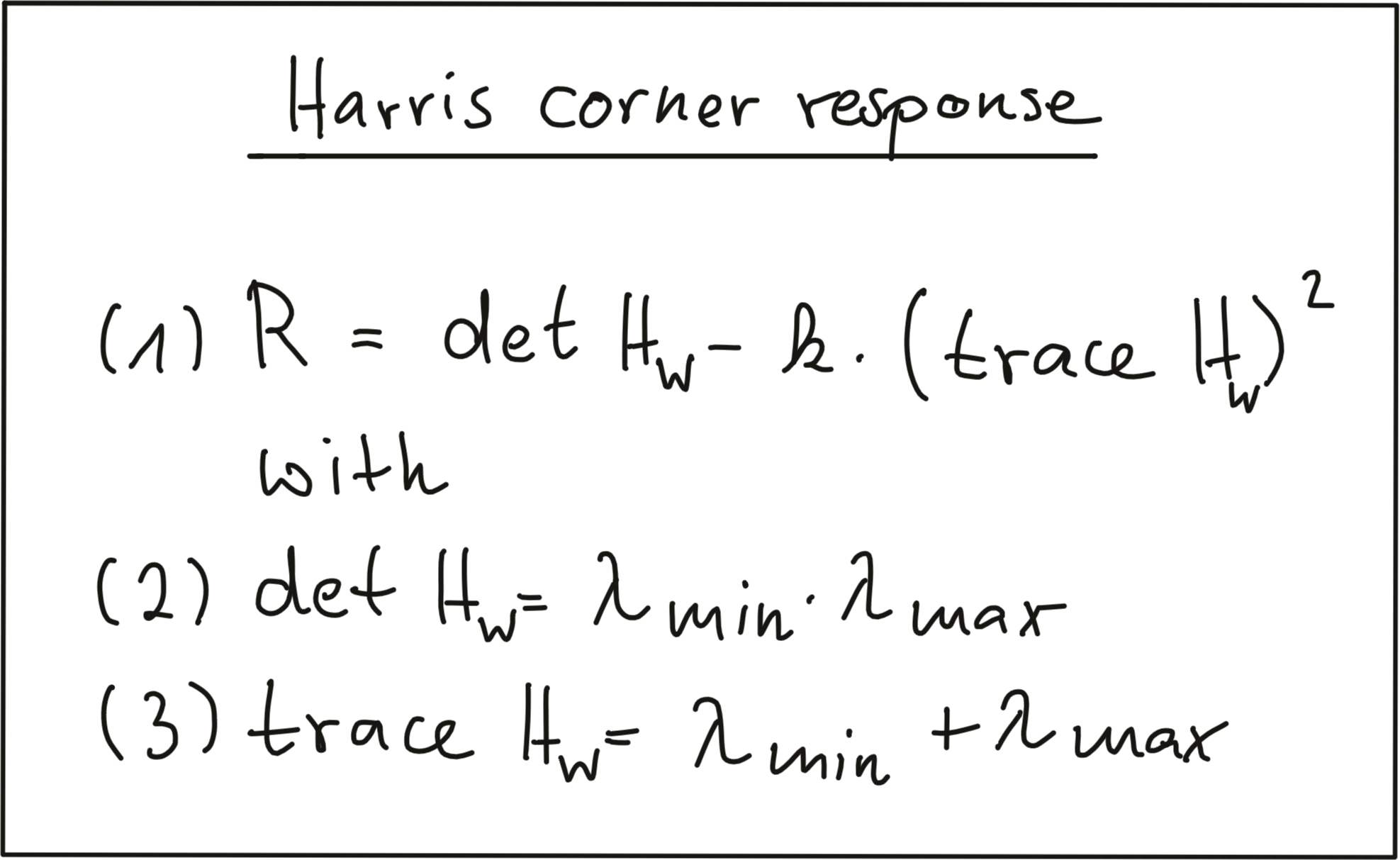

One of the most famous corner detectors based on the eigenvalues of H is the Harris detector. At every pixel location, this method evaluates the expression $R = \det(H) - k \cdot \operatorname{trace}(H)^2 = \lambda_1 \lambda_2 - k \cdot (\lambda_1 + \lambda_2)^2$ to derive a corner response measure, where $\lambda_1$ and $\lambda_2$ are the eigenvalues of H and the factor k is an empirical constant that usually lies between 0.04 and 0.06.
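The Harris response $R = \det(H) - k \cdot \operatorname{trace}(H)^2$ is cheap to compute, because $\det(H) = \lambda_1 \lambda_2$ and $\operatorname{trace}(H) = \lambda_1 + \lambda_2$, so the eigenvalues never have to be computed explicitly. A minimal C++ sketch, assuming $H = \begin{bmatrix} a & b \\ b & c \end{bmatrix}$ and using the hypothetical function name `harrisResponse`:

```cpp
#include <cassert>

// Harris corner response for H = [a b; b c]:
//   R = det(H) - k * trace(H)^2 = lambda1 * lambda2 - k * (lambda1 + lambda2)^2
// k is the empirical constant, typically chosen between 0.04 and 0.06.
double harrisResponse(double a, double b, double c, double k = 0.04) {
    assert(k > 0.0);
    double det = a * c - b * b;
    double trace = a + c;
    return det - k * trace * trace;
}
```

With k = 0.04, a corner-like H = diag(1, 1) gives R = 0.84 > 0, an edge-like H = diag(1, 0) gives R = -0.04 < 0, and a flat region with H = 0 gives R = 0.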

Based on the concepts presented in this section, the following code computes the corner response for a given image and displays the result.

// load image from file

cv::Mat img;

img = cv::imread("./img1.png");

// convert image to grayscale

cv::Mat imgGray;

cv::cvtColor(img, imgGray, cv::COLOR_BGR2GRAY);

// Detector parametersint blockSize = 2; // for every pixel, a blockSize × blockSize neighborhood is consideredint apertureSize = 3; // aperture parameter for Sobel operator (must be odd)int minResponse = 100; // minimum value for a corner in the 8bit scaled response matrixdouble k = 0.04; // Harris parameter (see equation for details)// Detect Harris corners and normalize output

cv::Mat dst, dst_norm, dst_norm_scaled;

dst = cv::Mat::zeros(imgGray.size(), CV_32FC1 );

cv::cornerHarris( imgGray, dst, blockSize, apertureSize, k, cv::BORDER_DEFAULT );

cv::normalize( dst, dst_norm, 0, 255, cv::NORM_MINMAX, CV_32FC1, cv::Mat() );

cv::convertScaleAbs( dst_norm, dst_norm_scaled );

// visualize resultsstring windowName = "Harris Corner Detector Response Matrix";

cv::namedWindow( windowName, 4 );

cv::imshow( windowName, dst_norm_scaled );

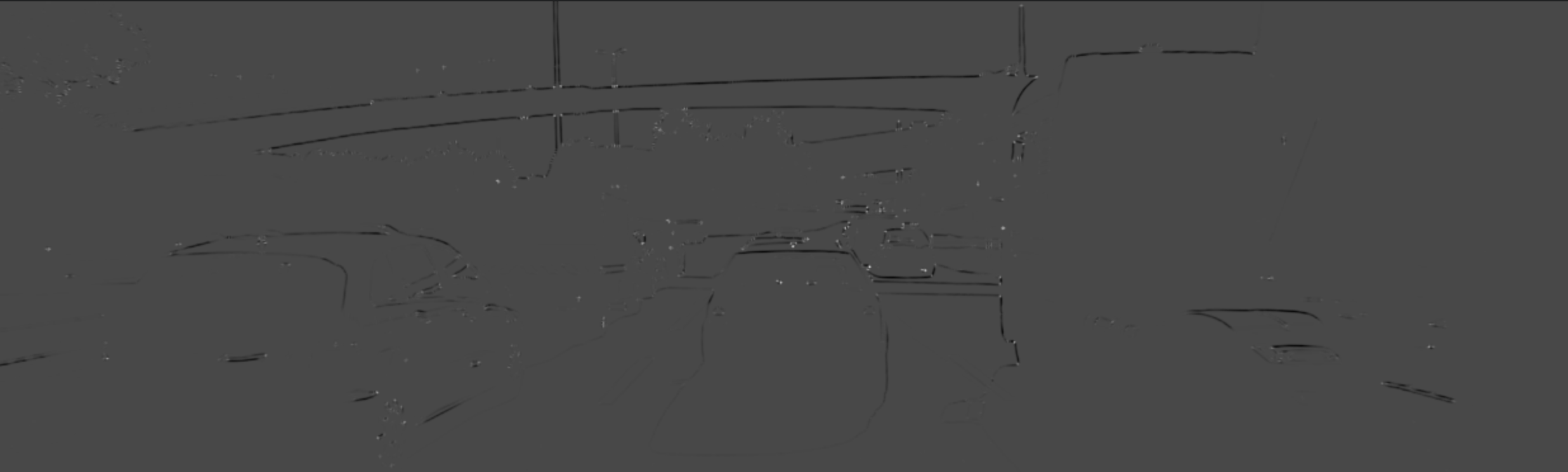

cv::waitKey(0);The result can be seen below : The brighter a pixel, the higher the Harris corner response.

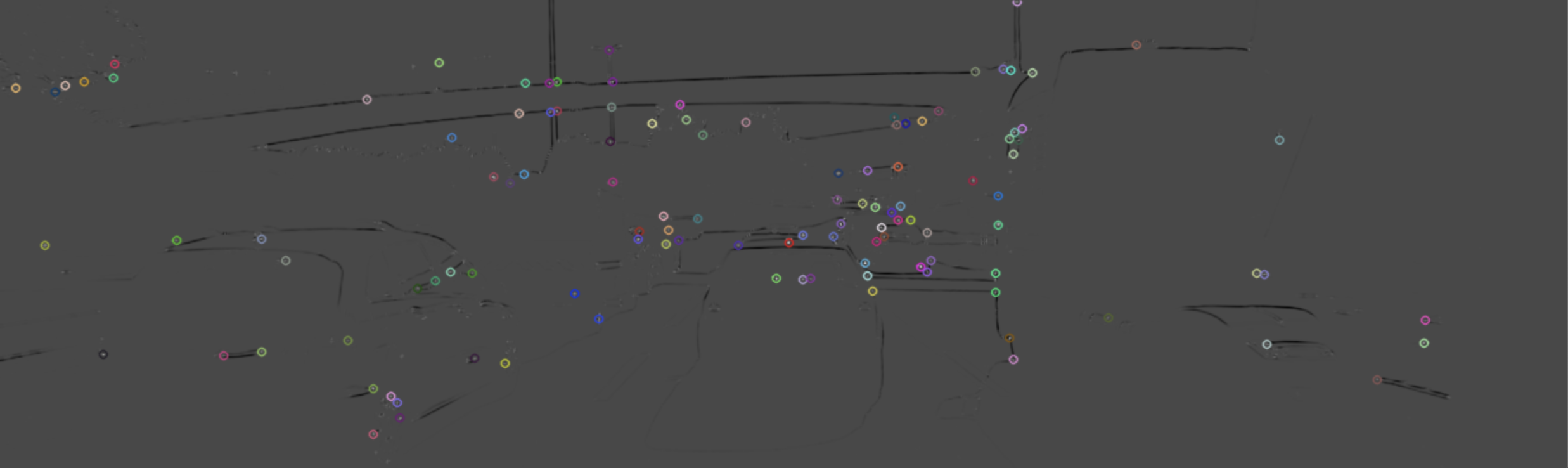

So the output of the Harris detector is essentially a collection of light and dark patches. Next, we need to locate keypoints in the image that satisfy two conditions:

- They have the highest cornerness response in their local neighborhood.

- They should not overlap too much with other keypoints around them, to avoid too many points clustering in a small neighborhood.

This technique is called non-maximum suppression (NMS).
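A simple variant of NMS keeps a pixel only if its response exceeds a threshold (compare `minResponse` in the code above) and no other pixel in a small square neighborhood has a strictly larger response. The C++ sketch below implements this local-maximum variant on a row-major response matrix. `Corner`, `nonMaximumSuppression` and the `radius` parameter are hypothetical names, and this is only one way of performing NMS; an overlap-based variant is hinted at at the end of this section.

```cpp
#include <cassert>
#include <vector>

struct Corner {
    int x;
    int y;
    float response;
};

// Keeps pixel (x, y) if its response exceeds minResponse and no pixel in the
// (2 * radius + 1)^2 neighborhood has a strictly larger response.
// Neighbors with exactly equal responses may both be kept.
std::vector<Corner> nonMaximumSuppression(const std::vector<float>& response, int width, int height,
                                          float minResponse, int radius) {
    assert(static_cast<int>(response.size()) == width * height && radius >= 1);
    std::vector<Corner> corners;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            float r = response[y * width + x];
            if (r <= minResponse) continue; // too weak to be a corner
            bool isMax = true;
            for (int dy = -radius; dy <= radius && isMax; ++dy) {
                for (int dx = -radius; dx <= radius; ++dx) {
                    int px = x + dx;
                    int py = y + dy;
                    if (px < 0 || py < 0 || px >= width || py >= height) continue;
                    if (response[py * width + px] > r) { isMax = false; break; }
                }
            }
            if (isMax) corners.push_back({x, y, r});
        }
    }
    return corners;
}
```

In a 5×5 response matrix with values 200 at (1, 1), 150 at (2, 1) and 120 at (4, 4), a threshold of 100 and `radius = 1`, the weaker neighbor at (2, 1) is suppressed and two corners remain.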

Applying NMS will result in the following image:

CV Library

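- cv::Mat → rows, cols (number of rows and columns of the matrix)

- matrixName.at<T>(row, col) → returns a reference to the element of type T at that location; note that the row (y) comes first and the column (x) second.

- cv::KeyPoint → data structure for salient point detectors; its member pt holds the keypoint coordinates.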

```cpp
// i (column, x), j (row, y) and response come from a loop over the response matrix
cv::KeyPoint newKeyPoint;
newKeyPoint.pt = cv::Point2f(i, j);   // coordinates of the keypoint
newKeyPoint.size = 2 * apertureSize;  // diameter of the meaningful keypoint neighborhood
newKeyPoint.response = response;      // the response by which the strongest keypoints have been selected
```

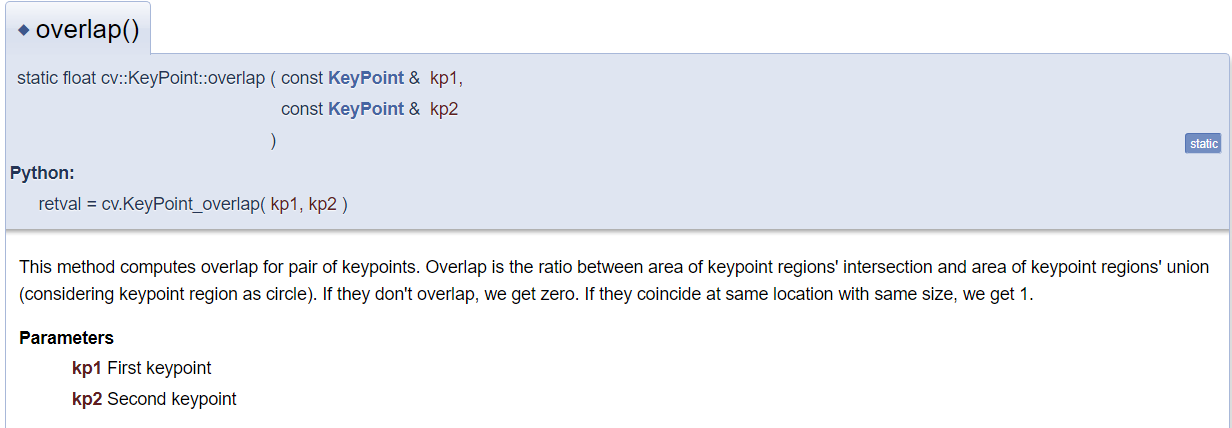

→ This is very similar to IoU (Intersection over Union), which is used to compare bounding boxes in neural-network object detection. 🙂
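To make the IoU analogy concrete: if two keypoints are treated as circles (center `pt`, diameter `size`), their overlap can be measured as the ratio of intersection area to union area. The sketch below computes this in plain C++; `circleIoU` is a hypothetical name. OpenCV also provides `cv::KeyPoint::overlap(kp1, kp2)`, documented as computing this circle-based ratio, so check the documentation of your OpenCV version before relying on either.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Intersection over union of two circular keypoint regions, given their centers
// and diameters (cv::KeyPoint::size is a diameter, so radius = size / 2).
double circleIoU(double x1, double y1, double size1, double x2, double y2, double size2) {
    assert(size1 > 0.0 && size2 > 0.0);
    const double pi = std::acos(-1.0);
    double r1 = 0.5 * size1;
    double r2 = 0.5 * size2;
    double d = std::hypot(x2 - x1, y2 - y1); // distance between the centers
    double inter;
    if (d >= r1 + r2) {
        inter = 0.0; // disjoint circles
    } else if (d <= std::abs(r1 - r2)) {
        double r = std::min(r1, r2);
        inter = pi * r * r; // one circle lies completely inside the other
    } else {
        // partial overlap: sum of two circular segments
        double a1 = std::acos((d * d + r1 * r1 - r2 * r2) / (2.0 * d * r1));
        double a2 = std::acos((d * d + r2 * r2 - r1 * r1) / (2.0 * d * r2));
        double k = std::sqrt((-d + r1 + r2) * (d + r1 - r2) * (d - r1 + r2) * (d + r1 + r2));
        inter = r1 * r1 * a1 + r2 * r2 * a2 - 0.5 * k;
    }
    double unionArea = pi * r1 * r1 + pi * r2 * r2 - inter;
    return inter / unionArea;
}
```

Two identical keypoints give 1, disjoint ones give 0, and two keypoints of diameter 2 whose centers are 1 pixel apart give roughly 0.243.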